Teleoperations era of software

Partial Autonomy Software: Software 2.5

Software Synthesis analyses the evolution of software companies in the age of AI - from how they're built and scaled, to how they go to market and create enduring value. You can reach me at akash@earlybird.com.

London AI Breakfast Series

The recent AI GTM session was the first of a recurring series of London AI breakfasts.

The next event, focused on the MCP ecosystem, is full, but there are more events in the pipeline, starting with the 16th July breakfast on Computer Use and Browser Agents. If you’re a founder or operator building in this space, sign up soon, as spots are limited.

Andrej Karpathy’s talk on Software 3.0 at YC AI Startup School made waves this week, and rightly so.

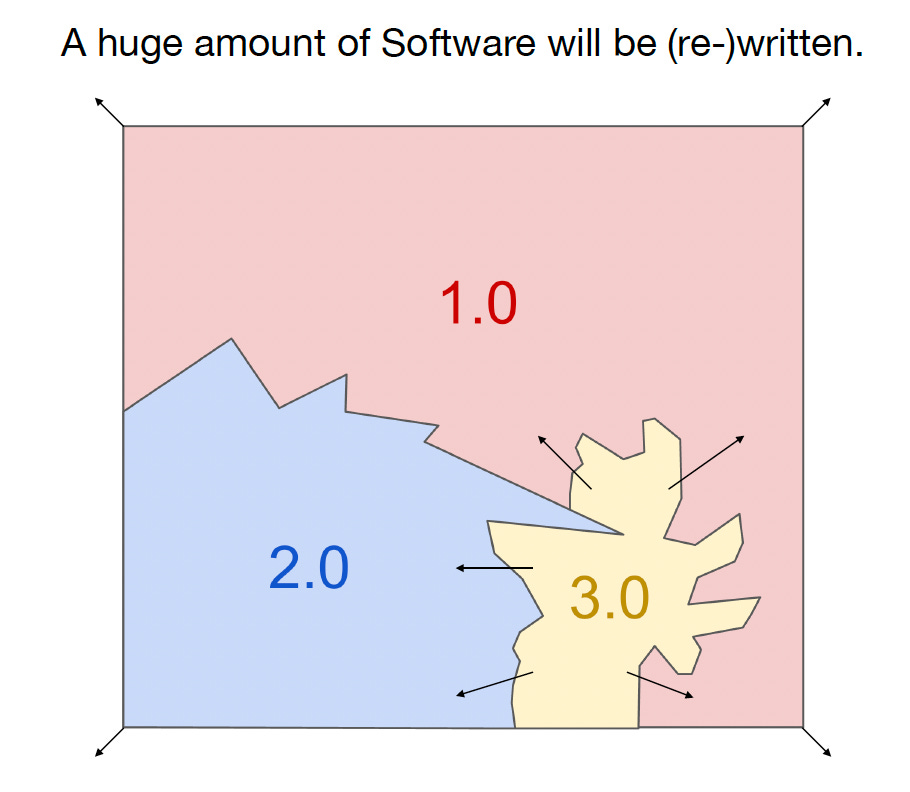

Building on his 2017 ‘Software 2.0’ essay, Andrej describes how Software 3.0, software written in English as prompts to LLMs, is eating Software 1.0 and 2.0.

He goes on to characterise LLMs as ‘people spirits’, exhibiting a kind of emergent psychology. As we all know, LLMs’ savant-like mastery of human knowledge comes with the drawbacks of hallucination, jagged intelligence, a lack of memory, and sycophancy.

Ergo, proclamations that ubiquitous agents are imminent are wide of the mark.

Andrej recounts how a Waymo ride in 2013 convinced him that fully autonomous vehicles were not far away, yet 12 years later the AV industry is still in its infancy.

The arc of agent autonomy may follow a similar timeline, despite the dizzying rate of improvement we’re seeing.

Graduating from L1 to L5 in self-driving is as much a data problem as graduating from vibe-coding personal websites to agents building production-ready code for critical systems.

As autonomy increases, so does trust for higher-stakes tasks.

A friend described his first experience in a Waymo as:

My first experience in a Waymo was not scary. That was because it has a screen that shows you what the car sees. When you look out onto the street, if the real world matches what that screen tells you, you feel safe because you know the car’s model of the world has taken into account your very specific circumstances at that point in time. It does this all the time, which builds confidence in its decision-making.

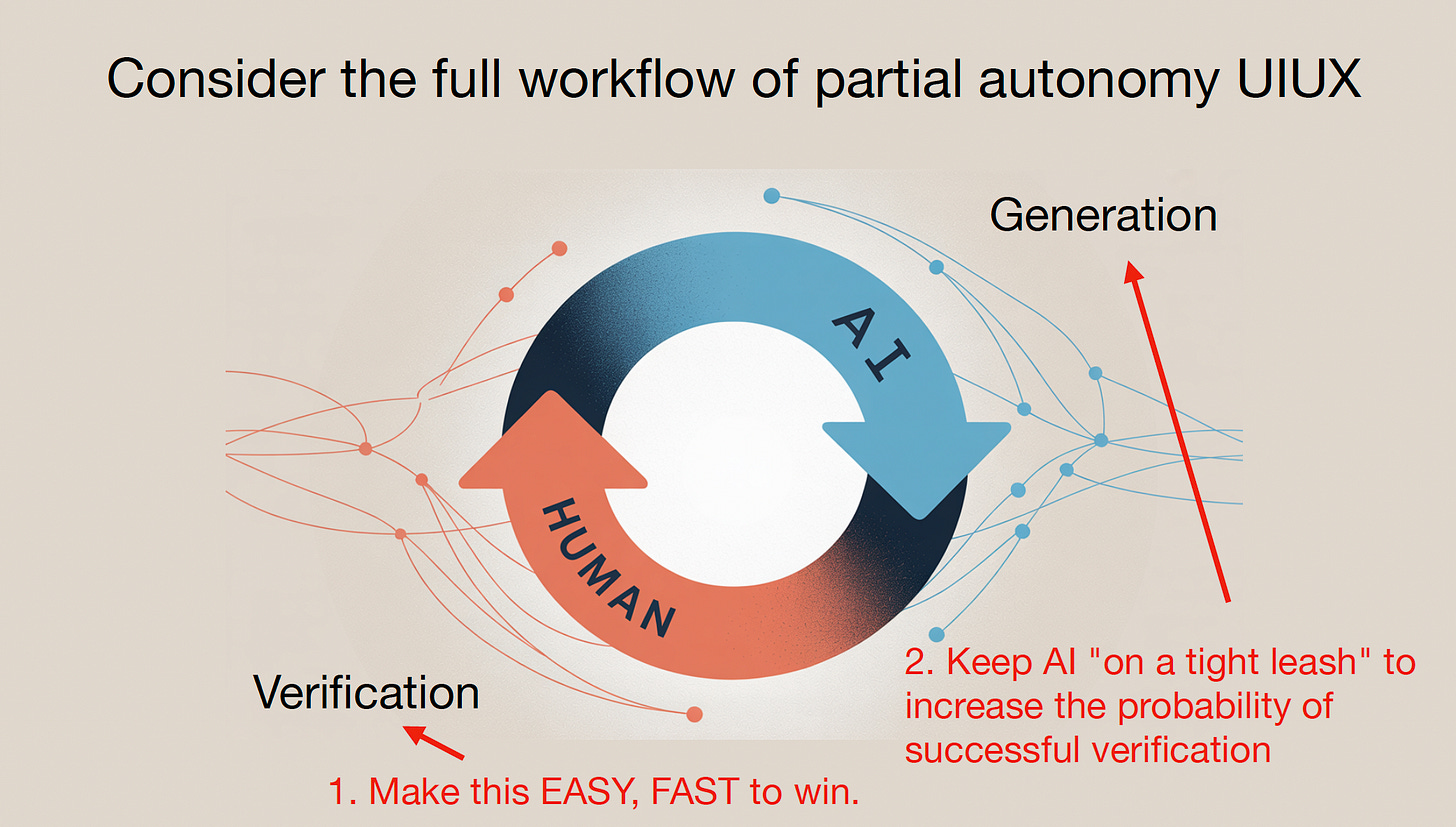

In building this Software 2.5 (until we get to fully autonomous agents), a big part of making agents successful, and letting them take over more critical work, will centre on us getting comfortable delegating more and more tasks to them and watching them get those tasks right.

Much like the AV and robotics industries have built teleoperations tooling to deploy their products and spur adoption, the next decade will see agent builders design software that is partially autonomous.

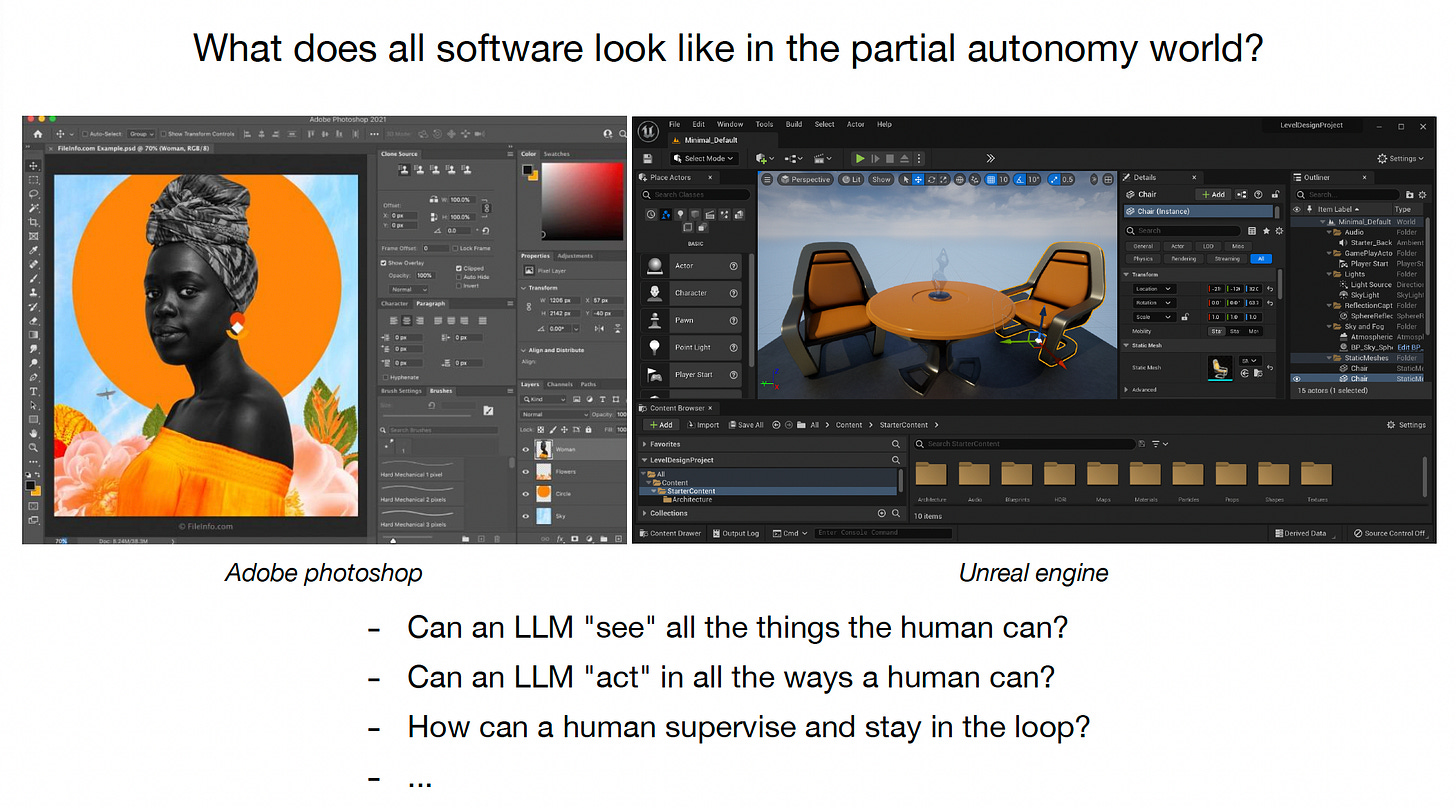

For LLMs to see everything humans can, we need to rethink the way the internet is currently designed.

The demand-side explosion of agent builders will eventually push supply-side service providers to optimise for agent consumption: the era of agent experience arrives.

llms.txt files and documentation written in markdown are just the start.

Here’s Daytona CEO Ivan Burazin on how they’ve been rearchitecting their product for agent consumption:

We asked: if an agent is the end user, what does it actually need from a compute environment?

The first answer is obvious: everything must be API-driven. Spin up a machine, archive it, delete it, all through endpoints. That’s standard.

Inside each environment, agents don’t need UIs or keyboards. They need headless tooling: file explorers, shell access, LSP support, git clients, all available through structured, predictable APIs.
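To make Ivan’s point concrete, here is a minimal Python sketch of what an agent-first compute API might look like. Everything in it (`SandboxService`, its methods, and the `{"ok": ...}` response shape) is hypothetical and purely illustrative; it is not Daytona’s SDK. The idea it shows is that every lifecycle and file operation is a structured call that always returns a predictable dict, so an agent never has to read a UI or parse a stack trace.

```python
import uuid
from dataclasses import dataclass, field
from enum import Enum


class State(Enum):
    RUNNING = "running"
    ARCHIVED = "archived"


@dataclass
class Sandbox:
    """A headless environment: no UI, only state exposed through the API."""
    id: str
    state: State = State.RUNNING
    files: dict = field(default_factory=dict)


class SandboxService:
    """Hypothetical agent-facing compute API (illustrative, not Daytona's SDK)."""

    def __init__(self) -> None:
        self._boxes: dict = {}

    def create(self) -> dict:
        box = Sandbox(id=uuid.uuid4().hex[:8])
        self._boxes[box.id] = box
        return {"ok": True, "id": box.id, "state": box.state.value}

    def archive(self, box_id: str) -> dict:
        box = self._running(box_id)
        if box is None:
            return self._error(box_id, "not a running sandbox")
        box.state = State.ARCHIVED
        return {"ok": True, "id": box_id, "state": box.state.value}

    def delete(self, box_id: str) -> dict:
        if self._boxes.pop(box_id, None) is None:
            return self._error(box_id, "unknown sandbox")
        return {"ok": True, "id": box_id, "state": "deleted"}

    def write_file(self, box_id: str, path: str, content: str) -> dict:
        box = self._running(box_id)
        if box is None:
            return self._error(box_id, "not a running sandbox")
        box.files[path] = content
        return {"ok": True, "path": path, "bytes": len(content.encode())}

    def list_files(self, box_id: str) -> dict:
        box = self._running(box_id)
        if box is None:
            return self._error(box_id, "not a running sandbox")
        return {"ok": True, "files": sorted(box.files)}

    def _running(self, box_id: str):
        # Only running sandboxes accept file operations or archiving.
        box = self._boxes.get(box_id)
        return box if box is not None and box.state is State.RUNNING else None

    @staticmethod
    def _error(box_id: str, reason: str) -> dict:
        # Errors are returned as data, not raised, so an agent can branch on them.
        return {"ok": False, "id": box_id, "error": reason}
```

Returning errors as data rather than raising them is one reasonable choice for agent consumers: the model sees the same response shape on every call and can decide what to do next from the `ok` and `error` fields alone.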

For LLMs to act as effectively as humans can, humans will still need to be in the loop for many years to come.

Andrej likened this to Tony Stark’s Iron Man suit, which can shift between augmentation and autonomy as circumstances demand. The analogy holds up pretty well.

Agent builders developing their own evaluation tooling are focusing on exactly this human-machine symbiosis: scalable pipelines for human data collection and human-in-the-loop infrastructure.

Considerations would include:

Verification Interfaces: What are effective ways for humans to quickly audit and correct AI outputs?

Error Handling and Recovery: How can the user easily correct the agent ("undo" for AI actions)? One answer is snap-back and versioning: every agent action is a reversible commit, and the UX makes “undo/rewind” a first-class control.

Context Scaffolding: How can the UI make it easier for users to provide the right context to the agent?

Progressive Permissioning: the agent asks for scopes just in time (“may I read your inbox to schedule?”).

Confidence-Weighted Affordances: buttons brighten only when the model’s self-reported certainty or eval score clears a threshold; below that, you fall back to human confirmation.

Instrument-Panel Overlay: Tesla’s Autopilot shows what the neural net “sees”. LLM products can likewise surface parse trees, citations, or tool-call traces to earn trust at higher autonomy settings.
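Two of these ideas, snap-back versioning and progressive permissioning, are simple enough to sketch. The minimal Python harness below is a hypothetical illustration: the `ReversibleAgentSession` class, the scope strings, and the `ask_user` callback are all assumptions, not any product’s API. It snapshots state before every action so any step can be rewound, and it asks for a permission scope only the first time an action needs it.

```python
import copy
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Commit:
    description: str
    before: dict  # snapshot of the state taken just before the action ran


class ReversibleAgentSession:
    """Hypothetical harness: every agent action is a commit that can be rewound."""

    def __init__(self, ask_user: Callable[[str], bool]) -> None:
        self.state: dict = {}
        self.log: List[Commit] = []
        self.granted: set = set()
        self._ask_user = ask_user

    def run(self, description: str, scope: str, action: Callable[[dict], None]) -> bool:
        # Progressive permissioning: request a scope just in time, and only once.
        if scope not in self.granted:
            if not self._ask_user(f"May I use {scope} to {description}?"):
                return False  # user declined; nothing is recorded or changed
            self.granted.add(scope)
        # Snapshot first, so the action becomes a reversible commit.
        self.log.append(Commit(description, copy.deepcopy(self.state)))
        action(self.state)
        return True

    def undo(self, steps: int = 1) -> List[str]:
        # Snap-back: restore the snapshot taken before each undone action.
        undone = []
        for _ in range(min(steps, len(self.log))):
            commit = self.log.pop()
            self.state = commit.before
            undone.append(commit.description)
        return undone
```

Snapshotting the whole state is the bluntest form of versioning; a production system would more likely store diffs or compensating actions, but the user-facing contract, that every step can be undone, stays the same.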

Let’s look at some examples in the wild.

Devin released an updated version (2.1) that uses 🟢 🟡 🔴 for confidence scores and waits for user approval when the confidence score isn’t high.
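A rough sketch of that kind of confidence gating, in Python. The 🟢 🟡 🔴 tiers mirror the description above, but the numeric cut-offs (0.8 and 0.5), the function names, and the callbacks are hypothetical assumptions, not how Devin actually computes or acts on its scores. Anything below the top tier waits for a human before running.

```python
from enum import Enum
from typing import Callable, Optional, Tuple


class Tier(Enum):
    GREEN = "🟢"
    YELLOW = "🟡"
    RED = "🔴"


# Hypothetical thresholds; in practice these would be calibrated against evals.
HIGH = 0.8
MEDIUM = 0.5


def tier_for(confidence: float) -> Tier:
    """Map a confidence score in [0, 1] to a traffic-light tier."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError(f"confidence must be in [0, 1], got {confidence}")
    if confidence >= HIGH:
        return Tier.GREEN
    if confidence >= MEDIUM:
        return Tier.YELLOW
    return Tier.RED


def run_with_gate(
    confidence: float,
    task: Callable[[], str],
    approve: Callable[[Tier], bool],
) -> Tuple[Tier, Optional[str]]:
    """Run high-confidence work autonomously; otherwise wait for human approval."""
    tier = tier_for(confidence)
    # Short-circuit: the human is only asked when confidence is not high.
    if tier is Tier.GREEN or approve(tier):
        return tier, task()
    return tier, None  # human declined, so nothing runs
```

The same gate maps onto the confidence-weighted affordances idea: the tier decides whether a control acts immediately or routes through human confirmation.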

Sierra acknowledges that the best deployments of their customer support agents have:

Fully-aligned customer experience and technology teams who are working closely to monitor those insights on a daily basis. Tight-knit collaboration creates an environment designed for continuous improvement and speed. For example, CX teams will review conversations for quality assurance while technical teams debug integrations and provision APIs.

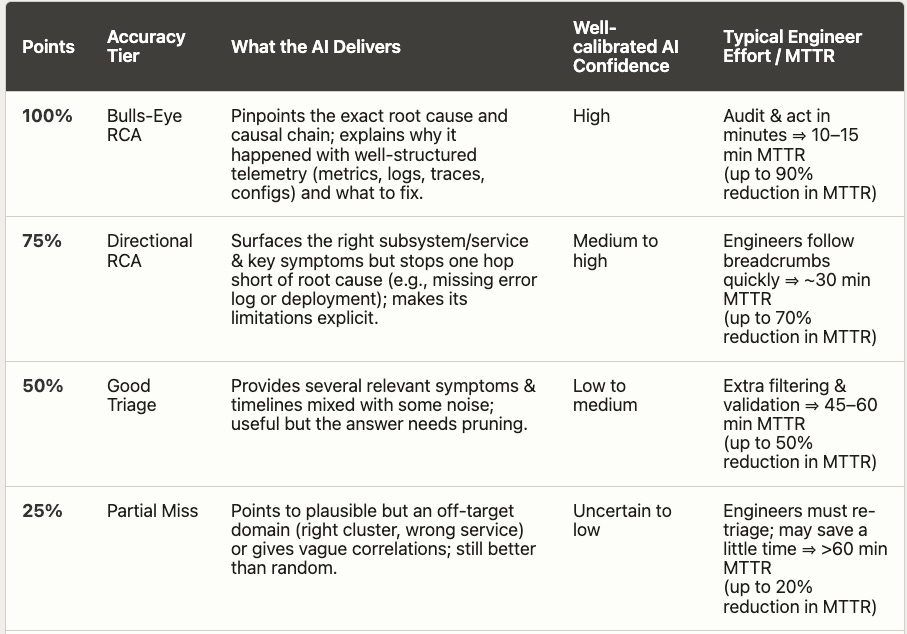

Traversal uses a rubric to convey the accuracy tiers of the root cause analyses (RCAs) produced by its AI SRE (site reliability engineer), with each tier carrying a different confidence weight.

It may not take 10 years, but we’re still a long way from full autonomy; designing the right human-machine symbiosis will create huge value in the interim.

Data

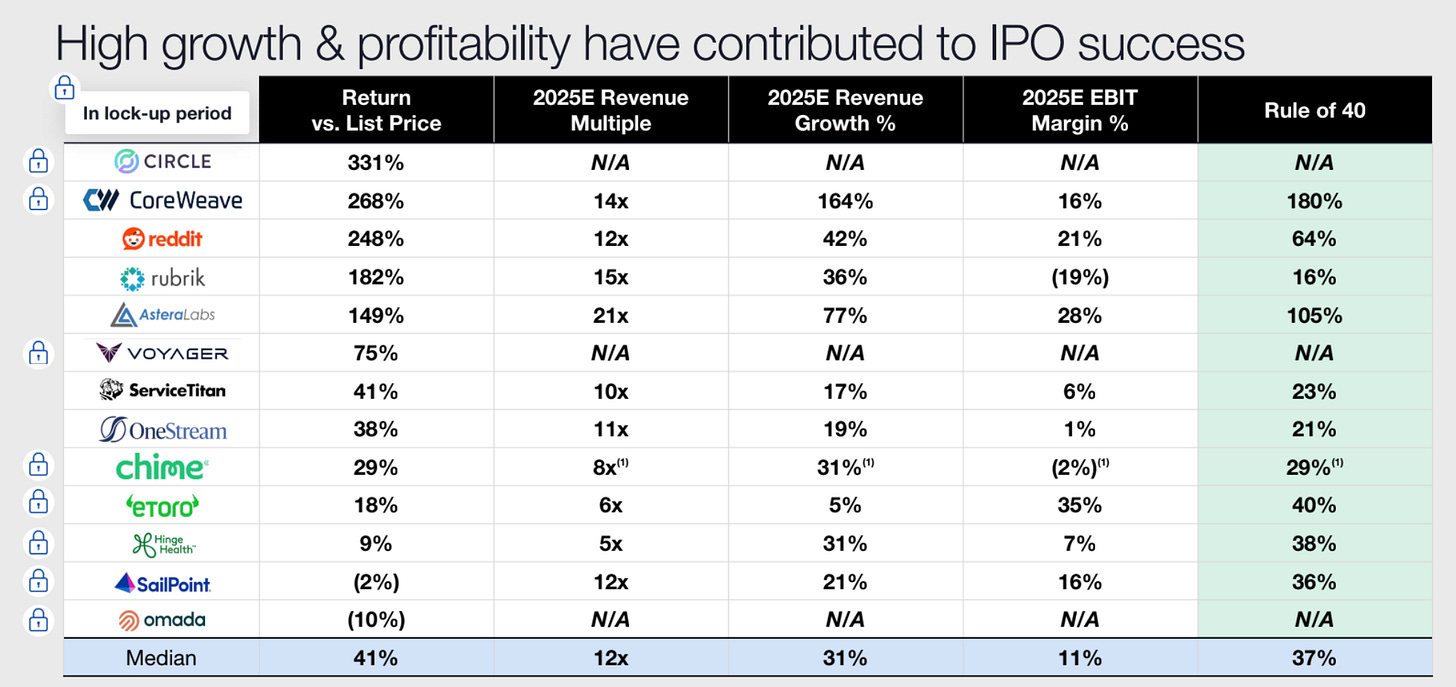

Coatue’s East Meets West (EMW) slides are always chock-full of insights, and the 10th edition didn’t disappoint.

Jobs

Early-stage European AI companies in my network are actively looking for talent. If any roles sound like a fit for you or your friends, don’t hesitate to reach out to me.

If you’re exploring starting your own company or joining one, or just figuring out what’s next, I’d love to introduce you to some of the excellent talent in my network.

Reads

The Epoch AI Brief - June 2025

How to fight back against AI tourists

Have any feedback? Email me at akash@earlybird.com.