The Parallel Scaling Law for Language Models

Alibaba's Qwen team delivers a new scaling law

Software Synthesis analyses the evolution of software companies in the age of AI - from how they're built and scaled, to how they go to market and create enduring value. You can reach me at akash@earlybird.com.

Necessity is the mother of invention

The whole world learned about the incredible hardware and software engineering coming out of China when DeepSeek’s models sent markets tumbling:

> Here’s the thing: a huge number of the innovations I explained above are about overcoming the lack of memory bandwidth implied in using H800s instead of H100s. Moreover, if you actually did the math on the previous question, you would realize that DeepSeek actually had an excess of computing; that’s because DeepSeek actually programmed 20 of the 132 processing units on each H800 specifically to manage cross-chip communications. This is actually impossible to do in CUDA. DeepSeek engineers had to drop down to PTX, a low-level instruction set for Nvidia GPUs that is basically like assembly language.

DeepSeek’s focus on efficiency is representative of the wider Chinese AI ecosystem’s adaptation to chip export bans, with the big Chinese tech companies heavily investing in foundation models.

Alibaba, in particular, has been making significant strides in recent months.

A few weeks ago, Alibaba released its Qwen3 family of open-source models under the Apache 2.0 license, ranging from 0.6 billion to 235 billion parameters.

Qwen3-235B-A22B is the most intelligent open-weights model on the market, despite activating only 22B of its 235B parameters at inference time.

The smaller variants packed a punch, too, delivering impressive reasoning performance for their size, which makes them well suited to mobile and edge applications. As with other Chinese labs pursuing efficiency, Qwen's lineup includes Mixture of Experts (MoE) variants that activate only a fraction of their parameters for each token. They therefore need far less compute per token than dense models of the same total size, although every expert still has to be held in memory.
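For readers unfamiliar with MoE, here is a minimal sketch of top-k expert routing, the mechanism that lets a model like Qwen3-235B-A22B hold many parameters but run only a few per token. The toy experts, the dot-product router, and `k=2` are all illustrative assumptions. They do not reflect Qwen3's actual router or expert configuration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x, experts, router_weights, k=2):
    """Route vector x to its top-k experts and mix their outputs.

    experts: toy callables mapping a vector to a vector (hypothetical).
    router_weights: one weight vector per expert; the router score is a dot product.
    Returns the mixed output and the indices of the experts that actually ran.
    """
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    # Keep only the k highest-scoring experts; the rest are skipped entirely.
    top_k = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    gates = softmax([scores[i] for i in top_k])
    out = [0.0] * len(x)
    for gate, i in zip(gates, top_k):
        y = experts[i](x)  # only the selected experts do any compute
        out = [o + gate * y_j for o, y_j in zip(out, y)]
    return out, top_k


# Eight toy experts, each scaling its input by a different factor (1..8).
experts = [lambda v, s=s: [s * vi for vi in v] for s in range(1, 9)]
router_weights = [[float(i), 0.0] for i in range(8)]
out, active = moe_layer([1.0, 2.0], experts, router_weights, k=2)
print(active, out)
```

Only experts 7 and 6 run for this input, but all eight must still sit in memory. This is why MoE saves compute per token rather than memory.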

The resulting models have quickly risen up the Hugging Face Open LLM Leaderboard.

I intend to write more about the innovations coming out of the Chinese labs in the coming months, but there was a particularly striking paper from the Qwen team that caught my eye this weekend: Parallel Scaling Law for Language Models.

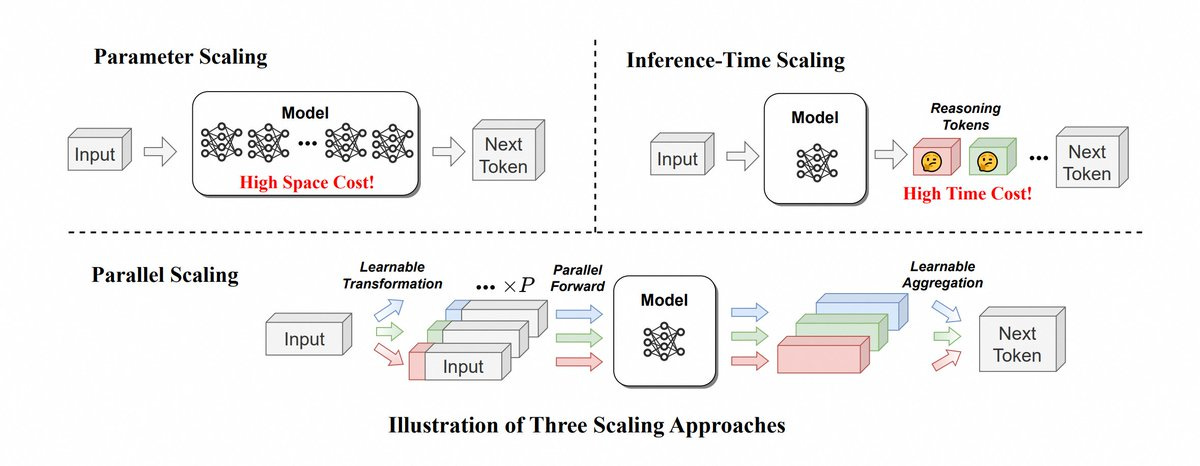

We've discussed the two well-known scaling laws at length: pre-training and test-time compute. Both are well understood, and hundreds of billions of dollars of capex are being deployed to keep scaling along these two vectors on the premise that the Bitter Lesson will continue to hold.

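Binyuan Hui and the team proposed PARSCALE, a new parallel scaling law that scales the number of parallel computational streams instead of the number of parameters. The model applies P different learnable transformations to the input, runs forward passes of the same model on all P versions in parallel, and dynamically aggregates the P outputs. Because the weights are reused rather than enlarged, this offers a cost-efficient way to scale compute and benefit from test-time ‘thinking’ at low latency.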
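The following is a minimal sketch of the transform, run-in-parallel, aggregate pattern in pure Python. It rests on several simplifying assumptions. A one-layer `toy_model` stands in for the LLM. Simple additive offsets stand in for the paper's learnable input transformations. A single hypothetical scoring vector `agg_w` replaces the paper's learned aggregation. This is not the authors' implementation. The one property it does preserve is that every stream shares the same weights `W`.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def toy_model(x, W):
    """Stand-in for the shared LLM: one linear layer followed by tanh."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in W]


def parscale_forward(x, W, prefixes, agg_w):
    """Run P parallel streams through the SAME weights W, then aggregate.

    prefixes: P per-stream input transformations (additive offsets here, a
        simplification standing in for the paper's learnable transformations).
    agg_w: hypothetical scoring vector used to weight each stream's output.
    """
    # 1. Transform the input differently for each stream; weights are shared.
    streams = [toy_model([xi + pi for xi, pi in zip(x, p)], W) for p in prefixes]
    # 2. Score each stream's output and normalise the scores into weights.
    weights = softmax([sum(a * y for a, y in zip(agg_w, out)) for out in streams])
    # 3. Combine the P outputs as a weighted sum.
    dim = len(streams[0])
    combined = [sum(w * out[j] for w, out in zip(weights, streams)) for j in range(dim)]
    return combined, weights


W = [[0.5, -0.2], [0.1, 0.3]]  # shared parameters: identical for every stream
prefixes = [[0.0, 0.0], [0.1, -0.1], [-0.2, 0.05], [0.3, 0.2]]  # P = 4
combined, weights = parscale_forward([0.5, -0.25], W, prefixes, agg_w=[1.0, -1.0])
print(weights, combined)
```

Going from P = 1 to P = 4 adds only the four small offset vectors in terms of parameters. The extra cost is four forward passes, and these can be batched together on the same hardware.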

Reasoning-heavy workloads like coding stand to gain the most from this new scaling law, since they benefit disproportionately from scaling compute (thinking) rather than parameters (memorisation).

PARSCALE requires up to 22 times less additional memory and adds up to 6 times less latency than parameter scaling that achieves the same performance improvement.
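A back-of-envelope accounting shows where the memory saving comes from. It assumes 16-bit weights, and it assumes each extra stream costs mainly its own KV cache plus a small set of per-stream parameters while the model weights stay shared. Every size below is a placeholder rather than one of the paper's configurations. The two options are also not matched for equal quality, so the sketch does not reproduce the 22 times figure. It only shows why adding streams grows memory far more slowly than adding parameters.

```python
def weight_bytes(n_params, bytes_per_param=2):
    """Memory for model weights, assuming 16-bit (2-byte) parameters."""
    return n_params * bytes_per_param


def kv_cache_bytes(layers, kv_heads, head_dim, seq_len, bytes_per_value=2):
    """KV-cache size for one sequence: keys and values for every layer and token."""
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_value


def parscale_extra_bytes(P, per_stream_kv, prefix_params_per_stream, bytes_per_param=2):
    """Extra memory for P streams relative to a single stream.

    Assumption of this sketch: weights are shared, so the extra cost is
    (P - 1) additional KV caches plus small per-stream parameters.
    """
    return (P - 1) * per_stream_kv + P * prefix_params_per_stream * bytes_per_param


# Placeholder configuration (hypothetical, not taken from the paper).
kv = kv_cache_bytes(layers=28, kv_heads=2, head_dim=128, seq_len=2048)
extra_parscale = parscale_extra_bytes(P=8, per_stream_kv=kv, prefix_params_per_stream=1_000_000)
extra_params = weight_bytes(1_500_000_000)  # alternative: add 1.5B more parameters
print(f"P=8 streams: +{extra_parscale / 1e9:.2f} GB, +1.5B params: +{extra_params / 1e9:.2f} GB")
```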

There are already many ways to make inference more efficient - quantisation, pruning, distillation, and more. PARSCALE, on the other hand, is a genuinely new scaling law that will allow us to scale test-time compute with lower latency. What’s more, bigger models (N) reap proportionally more gains from extra computational streams (P).
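The paper summarises its result as: a model with P parallel streams behaves similarly to one whose parameters are scaled by O(log P). The sketch below assumes the simplest form consistent with that claim, N_eff = N(1 + k ln P), where `k` is a hypothetical fitted constant and `equivalent_params` is an illustrative helper. It shows two properties. First, each doubling of P adds the same multiple of N. Second, because that gain scales with N, larger models pick up proportionally more equivalent parameters.

```python
import math


def equivalent_params(n_params, P, k):
    """Parameter count that P parallel streams are 'worth', assuming
    N_eff = N * (1 + k * ln P). P = 1 recovers the base model.

    k is a hypothetical fitted constant, not a value from the paper.
    """
    return n_params * (1 + k * math.log(P))


k = 0.2  # placeholder constant
for n in (1e9, 2e9, 4e9):
    gains = [equivalent_params(n, P, k) - n for P in (2, 4, 8)]
    print(f"N={n / 1e9:.0f}B  extra equivalent params for P=2,4,8: "
          + ", ".join(f"{g / 1e9:.2f}B" for g in gains))
```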

Let’s step back and examine some potential second-order effects.

Chips

Because PARSCALE adds capability mostly through compute rather than memory, workloads running on GPUs with high-bandwidth memory (HBM) could cede significant market share to on-device NPUs with on-chip SRAM.

If inference workloads increasingly move to the edge, the enormous data-centre build-out currently underway could end up primarily supporting training workloads. Inference is inevitably going to be an order of magnitude larger market than training, so this shift could help explain some of the mixed signals, such as Microsoft paring back some of its CoreWeave commitments.

Some winners here would be mobile-centric SoC makers (Apple, Qualcomm, MediaTek) and vendors of licensable NPU IP (CEVA NeuPro-M, Arm Ethos-U/N).

Infrastructure and applications

Tools that optimise inference at the edge, such as model compression and quantisation tools or runtime optimisation platforms, could see a strong tailwind.

With inference running on users' devices, compute would no longer be such a drag on gross margins, even though its cost has already been declining precipitously. This would further accelerate the venture creation already underway thanks to the democratisation of software engineering.

Models could be fine-tuned on on-device data to create more personalised experiences - that’s clearly how applications and models intend to compete.

This is an exciting new scaling law - the coming months will reveal how much headroom it has to run.

Interesting Data