Inference Time Scaling Laws

AI Megacycle Of System 1 And System 2 Applications

👋 Hey friends, I’m Akash! Software Synthesis is where I connect the dots on AI, software and company building strategy. You can reach me at akash@earlybird.com!

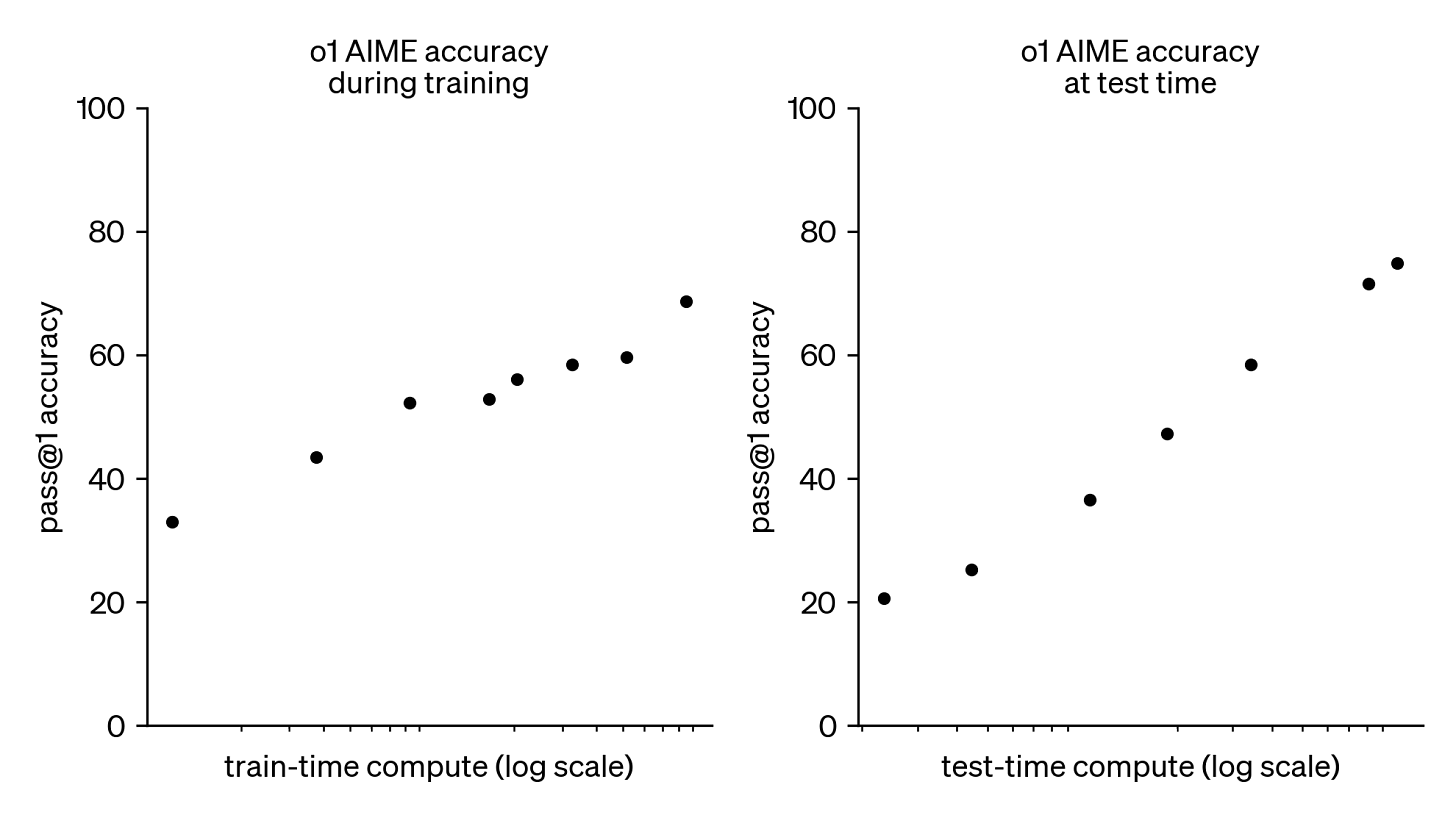

Pre-training scaling laws may soon hit a data wall, but OpenAI’s o1 model has revealed an entirely new scaling law: inference time scaling.

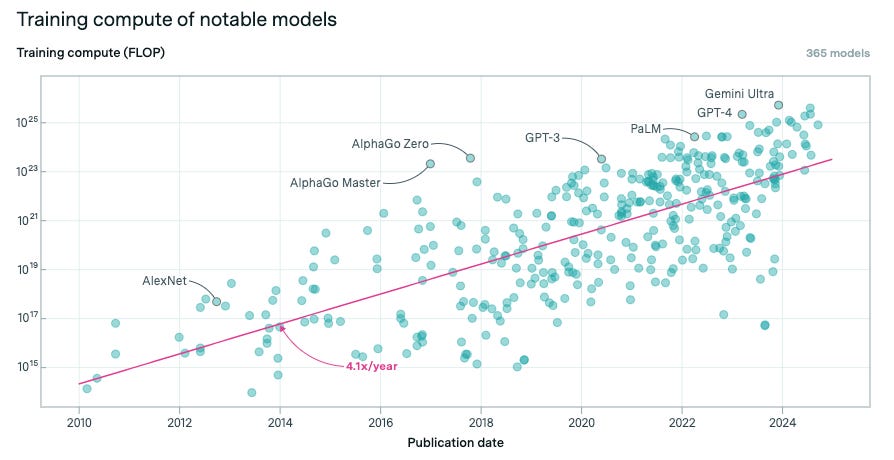

Until very recently, most of the focus in LLM scaling was on pre-training, with the compute used for training doubling roughly every 6 months for the last 14 years.
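To make that compounding concrete: doubling every 6 months for 14 years is 28 doublings. The short calculation below just does that arithmetic, taking the 6-month period and 14-year window from the text and assuming a perfectly regular doubling, which real compute growth only approximates. `growth_factor` is a hypothetical helper name.

```python
# Compound growth implied by "doubling every 6 months for 14 years".
# Assumption: a perfectly regular doubling period (real growth is lumpier).
DOUBLING_PERIOD_MONTHS = 6
YEARS = 14

def growth_factor(years: int, doubling_period_months: int) -> int:
    """Return the total multiplier after `years` of steady doubling."""
    doublings = (years * 12) // doubling_period_months
    return 2 ** doublings

factor = growth_factor(YEARS, DOUBLING_PERIOD_MONTHS)
doublings = (YEARS * 12) // DOUBLING_PERIOD_MONTHS
print(f"{doublings} doublings -> {factor:,}x more training compute")
```

That prints 28 doublings and a multiplier of 268,435,456x, i.e. roughly a 270-million-fold increase over the period.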

Despite the hundreds of billions of dollars of capex going into AI data centres, we’re likely to hit a data wall in 2027/28.

There’s this big debate in the AI field about what are the rate-limiters on progress, and the scaling purists think we need more scale, more compute. There are people who believe we need algorithmic breakthroughs, and so we are AI researcher limited, and then there are folks who believe we’re hitting a data wall, and what we’re actually gated on is high-quality data and maybe labeled data, maybe raw data, maybe video can provide it.

Short of architectural breakthroughs, current progress can only be extrapolated for a few more years (even though there would still be transformative value in simply realising the latent capabilities of today’s frontier models).

That’s why inference-time scaling laws matter.

o1 reallocates compute from pre-training to inference time, allowing it to ‘think’ and reason about problems for longer.
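OpenAI hasn’t published exactly how o1 spends its extra inference compute, so this is not o1’s method. It’s a simpler, publicly documented way to trade inference compute for accuracy: sample several independent answers and take a majority vote (often called self-consistency). Treating each sample as simply right or wrong, independently correct with probability `p` (a big simplification), the chance that a majority of `n` samples is right follows from the binomial distribution. `majority_vote_accuracy` and the 60% per-sample accuracy are hypothetical.

```python
from math import comb

def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that more than half of n independent samples are correct.

    Simplifying assumption: each sample is right with probability p and
    wrong otherwise, independently of the others. n must be odd so a
    strict majority always exists.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of samples to avoid ties")
    majority = n // 2 + 1
    # Sum the binomial probabilities of getting `majority` or more correct samples.
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(majority, n + 1))

# Hypothetical 60% per-sample accuracy; more samples = more inference compute.
for n in (1, 3, 5, 11, 51):
    print(f"{n:>2} samples -> {majority_vote_accuracy(0.6, n):.3f}")
```

When `p` is above 0.5, accuracy climbs with `n` but with shrinking gains per extra sample; below 0.5, voting makes things worse.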

Just like the scaling law for training, this seems to have no limit, but also like the scaling law for training, it is exponential, so to continue to improve outputs, you need to let the AI “think” for ever longer periods of time. It makes the fictional computer in The Hitchhiker’s Guide to the Galaxy, which needed 7.5 million years to figure out the ultimate answer to the ultimate question, feel more prophetic than a science fiction joke. We are in the early days of the “thinking” scaling law, but it shows a lot of promise for the future.
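One way to read “it is exponential” is a log-linear curve: if scores rise linearly with the logarithm of compute, every fixed gain in score needs a constant multiple more compute. The sketch below assumes a hypothetical fit `score = A + B * log10(compute)`; `A` and `B` are made-up placeholders for illustration, not measurements of o1 or any other model.

```python
import math

# Hypothetical log-linear fit: score = A + B * log10(compute).
# A and B are illustrative placeholders, not fitted to any real model.
A = 20.0  # score at 1 unit of inference compute
B = 10.0  # points gained per 10x increase in compute

def score(compute: float) -> float:
    """Score predicted by the assumed log-linear curve."""
    return A + B * math.log10(compute)

def compute_needed(target_score: float) -> float:
    """Invert the curve: inference compute required to reach target_score."""
    return 10 ** ((target_score - A) / B)

for target in (30, 40, 50, 60):
    print(f"score {target} needs {compute_needed(target):,.0f}x compute")
```

Under these placeholder coefficients each extra 10 points costs 10x more compute, which is why letting the model “think” for ever longer gets expensive quickly.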

One way to taxonomise AI capabilities and enterprise use cases is System 1 vs System 2.

‘One way to think about reasoning is there are some problems that benefit from being able to think about it for longer. You know, there’s this classic notion of System 1 versus System 2 thinking in humans. System 1 is the more automatic, instinctive response and System 2 is the slower, more process-driven response.’

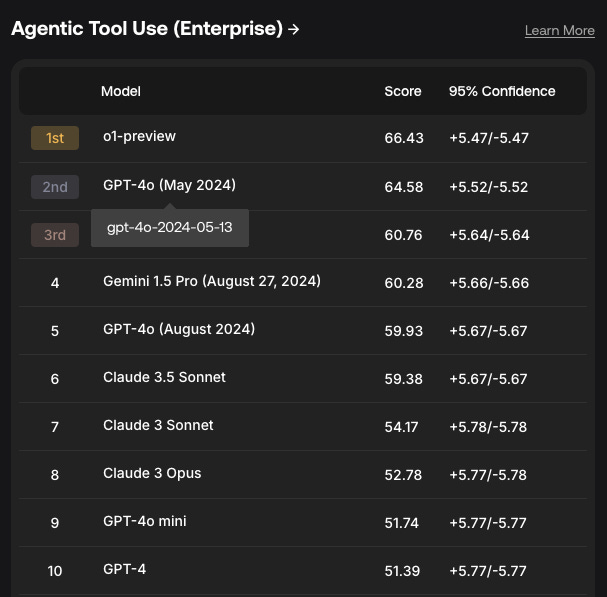

Much of AI’s promise of ‘selling work’ relies on ‘agentic’ capabilities like reflection, intelligent function calling and planning: effectively, reasoning.

Developers have so far derived agentic capabilities from existing frontier models using chain-of-thought and reflection tokens: instructions that explicitly tell a model to follow certain steps in executing a task, reflect on the output of each step before moving on to the next, and use the right tools to fetch context or knowledge.
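The step-by-step pattern described above can be sketched as a tiny orchestration scaffold: execute a plan one step at a time, call a tool for each step, and run a reflection check on every output before the next step is allowed to use it. This is a toy that assumes a fixed plan and deterministic stand-ins for both the tools and the model’s reflection; `TOOLS`, `reflect` and `run_plan` are hypothetical names, not any framework’s API.

```python
from typing import Callable

# Hypothetical tools. In a real agent these would hit search APIs, databases, etc.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup": lambda query: {"capital of France": "Paris"}.get(query, "UNKNOWN"),
    "shout": lambda text: text.upper() + "!",
}

def reflect(step: str, output: str) -> bool:
    """Stand-in for a reflection prompt ("did this step succeed?").

    A real agent would send `step` and `output` to the model; this stub
    ignores `step` and simply rejects empty or UNKNOWN outputs.
    """
    return bool(output) and output != "UNKNOWN"

def run_plan(plan: list[tuple[str, str]]) -> str:
    """Run each (tool, argument template) step, feeding the previous output forward.

    The literal "{prev}" in a template is replaced by the previous step's output.
    """
    prev = ""
    for tool_name, template in plan:
        arg = template.replace("{prev}", prev)
        output = TOOLS[tool_name](arg)
        if not reflect(f"{tool_name}({arg})", output):
            # Stop rather than pass a bad intermediate result downstream.
            raise RuntimeError(f"step {tool_name!r} failed reflection: {output!r}")
        prev = output
    return prev

print(run_plan([("lookup", "capital of France"), ("shout", "{prev}")]))
```

Failing fast on a bad reflection is just one design choice; an agent could instead feed the critique back to the model and retry the step.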

Looking purely at inference scaling, we’re probably still in the GPT-1 era of capabilities.

The thing that remains to be solved is general domain long horizon reliability. And I think you need inference time compute, test time compute, for that. So you’d want—when you try to prove a new theorem in math, or when you’re writing a large software program, or when you’re writing an essay of reasonable complexity, you usually wouldn’t write it token by token.

You’d want to think quite hard about some of those tokens. And finding ways to spend not 1x or 2x or 10x, but 1,000,000x the resources on that token in a productive way, I think, is really important. That is probably the last big problem.

Even if we scale up inference time compute by the orders of magnitude Eric is implying, it’d still be much cheaper than human workers, as Ben Thompson pointed out:

This second image is a potential problem in a Copilot paradigm: sure, a smarter model potentially makes your employees more productive, but those increases in productivity have to be balanced by both greater inference costs and more time spent waiting for the model (o1 is significantly slower than a model like 4o). However, the agent equation, where you are talking about replacing a worker, is dramatically different: there the cost umbrella is absolutely massive, because even the most expensive model is a lot cheaper, above-and-beyond the other benefits like always being available and being scalable in number.
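The cost-umbrella argument boils down to a simple inequality: an agent stays cheaper than a worker as long as the baseline inference cost per task, times the inference-compute multiplier, is below the worker’s cost for the same task. The sketch below computes the break-even multiplier. Every input (tokens per task, price per million tokens, worker cost per task) is a hypothetical placeholder rather than a quoted price, so whether a given multiplier clears the bar depends entirely on the numbers plugged in.

```python
def inference_cost(tokens: int, usd_per_million_tokens: float, multiplier: float = 1.0) -> float:
    """Cost of one task when inference compute (tokens) is scaled by `multiplier`."""
    return tokens / 1_000_000 * usd_per_million_tokens * multiplier

def breakeven_multiplier(tokens: int, usd_per_million_tokens: float,
                         worker_cost_per_task: float) -> float:
    """Largest compute multiplier at which the agent costs no more than the worker."""
    return worker_cost_per_task / inference_cost(tokens, usd_per_million_tokens)

# Hypothetical inputs: 10k tokens per task at $10 per million tokens,
# versus a worker costing $50 for the same task.
TOKENS, PRICE, WORKER_COST = 10_000, 10.0, 50.0
print(f"baseline cost per task: ${inference_cost(TOKENS, PRICE):.2f}")
print(f"break-even multiplier: {breakeven_multiplier(TOKENS, PRICE, WORKER_COST):,.0f}x")
```

Raising the worker’s cost per task or lowering the token price raises the break-even multiplier proportionally.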

There’s some early evidence that applying the right RL + CoT techniques to other frontier models, which haven’t yet fully exploited inference time scaling, can yield similar levels of performance, likely owing to some hidden chain-of-thought tokens. In any case, the other labs are undoubtedly investing in these new scaling laws and repurposing their compute.

As the other labs collectively channel their resources towards inference time scaling, we’ll see use cases and capabilities flourish. The early high-ROI use cases of code generation and customer service will benefit from System 2 reasoning, becoming capable of replacing more experienced workers. Inference time scaling will also unlock novel System 2 functions like financial planning and analysis, corporate development, and strategy.

The most exciting conclusion is how multiple app <> infrastructure cycles are combining into an AI megacycle, just as previous technology waves were stacked on top of each other to create de novo products and experiences.

Thank you for reading. If you liked this piece, please share it with your friends, colleagues, and anyone that wants to get smarter on startup strategy. Subscribe and find me on LinkedIn or Twitter.