Distribution and data flywheels in AI

Shipping minimum viable quality releases to win markets

Software Synthesis analyses the evolution of software companies in the age of AI - from how they're built and scaled, to how they go to market and create enduring value. You can reach me at akash@earlybird.com.

After the success of our 0 → 1 GTM session, we’ll be hosting a breakfast focusing on the MCP ecosystem in our London office - if you’re a founder or operator working with MCP servers, sign up here.

Vertical integration in AI is back in the spotlight now that Anthropic has cut Windsurf’s access to its models.

The core argument I made about vertical integration six months ago still holds - the best products get distribution, which begets data that then improves the product.

Search and data flywheels

Cursor CEO Michael Truell likened this dynamic to the search market in the early days of the internet.

Ben Thompson: Is that a real sustainable advantage for you going forward, where you can really dominate the space because you have the usage data, it’s not just calling out to an LLM, that got you started, but now you’re training your own models based on people using Cursor. You started out by having the whole context of the code, which is the first thing you need to do to even accomplish this, but now you have your own data to train on.

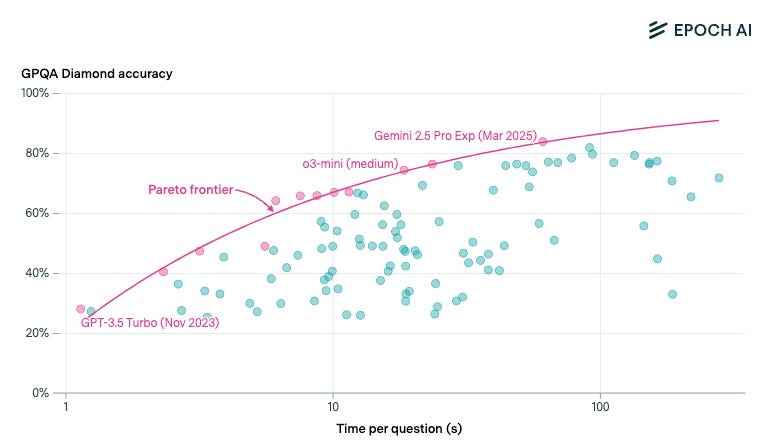

Michael Truell: Yeah, I think it’s a big advantage, and I think these dynamics of high ceiling, you can kind of pick between products and then this kind of third dynamic of distribution then gets your data, which then helps you make the product better. I think all three of those things were shared by search at the end of the 90s and early 2000s, and so in many ways I think that actually, the competitive dynamics of our market mirror search more than normal enterprise software markets.

RL on user behaviour data is the AI-equivalent of network effects.

In fact, having a product that aggregates or collects user behaviour is highly valuable as that is ultimately the most important dataset. One interesting implication of this is that AI startups with user data can RL custom models without needing large compute budgets to generate data synthetically.
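As a rough illustration of what turning user behaviour into an RL signal could look like, here is a minimal sketch that converts accept/reject events from a hypothetical coding assistant into reward-labelled samples. The event fields, outcome names and reward values are all assumptions made for illustration; this is not a description of how Cursor or any other vendor does it.

```python
from dataclasses import dataclass

# Hypothetical reward values; a real system would tune or learn these.
REWARDS = {"accepted": 1.0, "edited": 0.5, "rejected": -1.0}


@dataclass
class SuggestionEvent:
    prompt: str      # context shown to the model
    completion: str  # what the model suggested
    outcome: str     # what the user did with the suggestion


def to_reward_samples(events):
    """Convert raw behaviour events into prompt/completion/reward dicts."""
    samples = []
    for event in events:
        reward = REWARDS.get(event.outcome)
        if reward is None:
            continue  # skip outcomes we have no reward defined for
        samples.append(
            {
                "prompt": event.prompt,
                "completion": event.completion,
                "reward": reward,
            }
        )
    return samples
```

The point of the sketch is that the signal is a by-product of ordinary product usage, so the dataset grows with distribution rather than with compute spend.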

Anthropic knew that this dynamic would ultimately work in OpenAI's favour following OpenAI's reported acquisition of Windsurf - here’s cofounder Ben Mann:

From like a classic startup founder sense of what is important, I felt that coding as an application was something that we couldn't solely allow our customers to handle for us. So, we love our partners like Cursor and GitHub who have been using our models quite heavily, but the amount and the speed that we learn is much less if we don't have a direct relationship with our coding users.

Anthropic needs revenue from customers like Cursor, but ultimately runs the risk that Cursor will use its data advantage to commoditise Anthropic's models over time. For now, the two can co-exist, but in the long term, head-on competition is unavoidable. The case of Windsurf is much clearer.

If the competitive dynamics of AI markets are closer to search than classic enterprise software, this has several implications.

Distribution > Technology

Building in AI forces founders to hold two competing ideas at the same time.

On the one hand, AI represents the kind of platform shift that promises to see market share rotate to newer vendors who rebuild products from first principles.

On the other hand, if distribution begets data which results in better products, shouldn’t the incumbents win?

The balance is Minimum Viable Quality.

OpenAI and ChatGPT’s rapid ascent relative to consumer internet giants makes this point.

The version of ChatGPT that was released in November 2022 was simple. Yet the difference between ChatGPT as a product and GPT-3 as an API is what saw the current wave of AI take off.

There’s a reason why everyone is talking about speed.

Companies that ship fast acknowledge that a frequent release cycle of features that meet a minimum viable quality threshold is more conducive to winning market share and data flywheels than the ‘fat startup’ model.

Patrick O’Shaughnessy interviewed Rahul Vohra in 2020 and asked him about this trade-off in the context of Superhuman:

PS: One of my favorite debates in the world of technology entrepreneurship is the debate between sort of the lean model, where you're iterating and failing a lot and learning through customer feedback, and what I'll call the Keith Rabois movie production model, where it more sounds like what you've done, which is big long effort that is almost produced like a movie would be, with actors and a script and everything else, and you go to market with an incredible product, not one that's sort of been iterated through a crowd.

RV:

For context, in the summer of 2015 we started much like any other software company, by writing code. In the summer of 2016, we were still coding. In the summer of 2017, we were still coding. I felt this incredible, intense pressure to launch.

Here we were two years in, and we still had not launched. But deep down inside, I knew, no matter how intensely I felt that pressure, that a launch would go very badly. I did not believe that we had product to market fit.

So in April of 2017, I started my search for that Holy Grail. For a way to define product market fit, for a metric to measure product to market fit, and for a methodology to systematically increase product to market fit. That's actually how I came across my product to market fit engine, which is how many people know of me. It's actually a blend between the lean startup model and the Keith Rabois fat startup model, or the movie production model.

I'm actually a big believer in both, and I would say we sit somewhere in the middle. I would say, raise a tremendous amount of capital up front. I would say, take your decisions as long term as you can. I would say, don't put out a minimum viable product, put out a maximally delightful product. But I would also say, listen very closely to your users.

Would Superhuman’s approach work today, with the velocity of the AI landscape and the engineering productivity we’re seeing? Probably not.

The classic vectors of defensibility for enterprise software have been integrations and switching costs. But if AI apps are more like search engines, becoming the client where end-users spend hours doing work is the most valuable position in the AI stack.

LibreChat and the future of clients

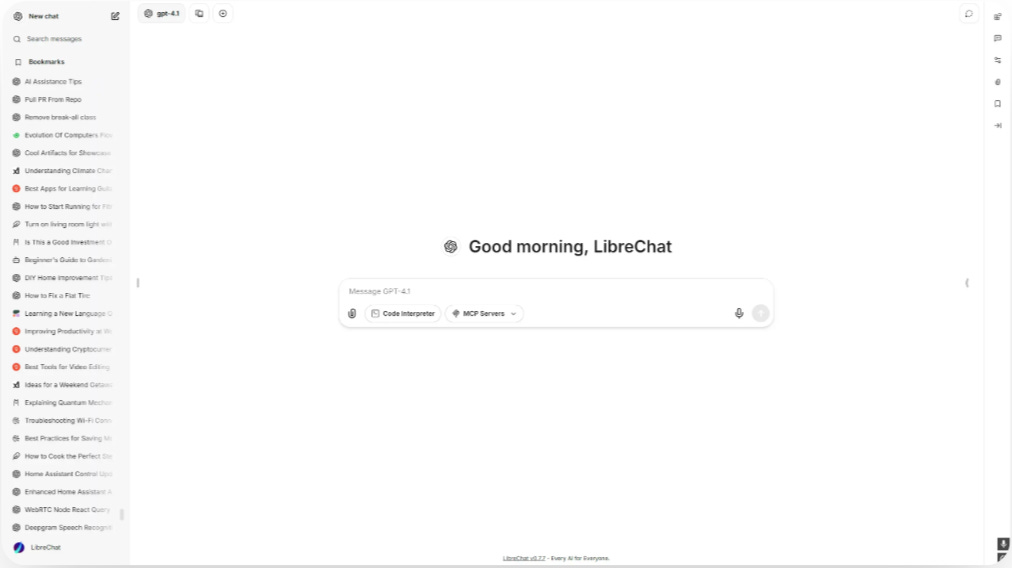

LibreChat is an open source alternative to ChatGPT that’s currently trending as the number one repo of the day.

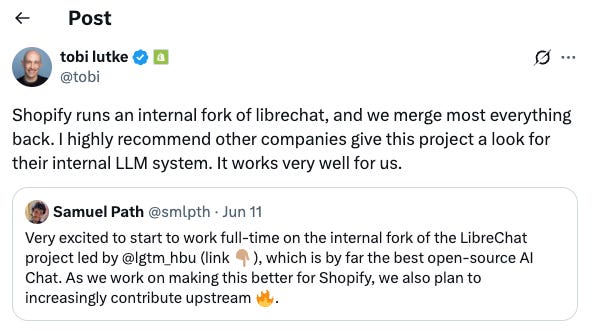

Developers get multi-model routing, agents, a code interpreter, tool calling and, increasingly, enterprise-grade features (e.g. auth, SSO). Companies like Shopify are actively contributing to its development by forking LibreChat and merging improvements back upstream.

The flywheel that we discussed earlier depends on who owns the end user and in turn their behaviour data.

LibreChat threatens to disintermediate clients like ChatGPT and Claude in the enterprise, and to help enterprises establish their own data flywheels.

Fine-tuning models for enterprises has generally failed to keep up with the relentless march of foundation models. Customised models may nonetheless start to make sense if those enterprises can set up the correct RL environments.

Run it in production, flip on full logging, and you have the same foundation for a proprietary data flywheel that clients like Claude, Cursor and ChatGPT are chasing, only targeted at whatever domain your enterprise cares about.
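To make "full logging" a little more concrete, the sketch below appends each chat turn to a JSONL file and later pairs liked and disliked responses to the same prompt, which is one common shape for preference data. The file layout, field names and thumbs-up/down feedback values are assumptions for illustration only; this is not LibreChat's actual logging format or API.

```python
import json
from collections import defaultdict


def log_interaction(path, prompt, response, model, feedback):
    """Append one chat turn to a JSONL log. feedback is 'up', 'down' or None."""
    record = {
        "prompt": prompt,
        "response": response,
        "model": model,
        "feedback": feedback,
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")


def build_preference_pairs(path):
    """Pair every liked response with every disliked one for the same prompt."""
    by_prompt = defaultdict(lambda: {"up": [], "down": []})
    with open(path, encoding="utf-8") as f:
        for line in f:
            record = json.loads(line)
            if record["feedback"] in ("up", "down"):
                by_prompt[record["prompt"]][record["feedback"]].append(
                    record["response"]
                )
    pairs = []
    for prompt, votes in by_prompt.items():
        for chosen in votes["up"]:
            for rejected in votes["down"]:
                pairs.append(
                    {"prompt": prompt, "chosen": chosen, "rejected": rejected}
                )
    return pairs
```

Because the log records which model produced each response, the same file could also show how calls are spread across models, though how an enterprise would actually train on this data depends on the RL environment it sets up.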

This is especially true in a post-MCP world where users spend less time switching between apps and do more of their work in one pane of glass.

The implication of this would be more small language models in the enterprise, fewer ChatGPT seats, and a more even distribution of API calls across different models.

For now, LibreChat only threatens clients that have a simple chat interface. But over time, it could also begin to encroach on other clients that are embedded in workflows. That is likely to happen sooner rather than later as more enterprises work on their own internal forks.

Data

Jobs

Companies in my network are actively looking for talent - if any roles sound like a fit for you or your friends, don’t hesitate to reach out to me.

If you’re exploring starting your own company or joining one, or just figuring out what’s next, reach out to me - I’d love to introduce you to some of the excellent talent in my network.

Reads

Building AI products with Zep AI

The Rise of Systems of Consolidation Applications

Building an Outlier Firm: What Makes Amplify Different

Have any feedback? Email me at akash@earlybird.com.
