Building Enterprise AI: Databricks' Chief AI Officer Maria Zervou

Governing Agents In Production

Software Synthesis analyses the evolution of software companies in the age of AI: from how they're built and scaled, to how they go to market and create enduring value. You can reach me at akash@earlybird.com.

Gradient Descending Roundtables in London:

Last week, we hosted Maria Zervou, Chief AI Officer EMEA at Databricks, for a Gradient Descending roundtable.

Databricks’ phenomenal growth at scale (50% YoY, $4bn run-rate, 140% NDR) underlines its position as the leading platform for enterprises to deploy AI.

The roundtable covered a lot of ground. The key takeaways are below:

Data Intelligence vs. General AI

Databricks’ approach is distinct from generic LLM applications:

General AI: Using OpenAI/Anthropic models with prompt engineering alone

Data Intelligence: Grounding AI in proprietary data with robust governance

Competitive advantage comes from data access, not just model selection

Three Pillars of Databricks’ AI Platform

Governance (Unity Catalog)

Centralised permissions for data, models, and tools

End-to-end lineage tracking from data → application → end user

Critical for EU compliance and financial services

Open-source governance layer
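To make "end-to-end lineage" concrete, here is a minimal sketch, assuming lineage is stored as simple (upstream, downstream) edges. It is a toy in-memory graph, not Unity Catalog's API, and every asset name is hypothetical. Tracing upstream from an end user shows which raw tables ultimately fed the answer they received, which is the question auditors tend to ask.

```python
from collections import defaultdict

# Hypothetical lineage edges: (upstream asset, downstream asset).
# Names are illustrative only, not real Unity Catalog objects.
EDGES = [
    ("raw.transactions", "silver.transactions_clean"),
    ("raw.customers", "silver.customers_clean"),
    ("silver.transactions_clean", "gold.credit_features"),
    ("silver.customers_clean", "gold.credit_features"),
    ("gold.credit_features", "app.credit_assistant"),
    ("app.credit_assistant", "user:analyst@bank.example"),
]

def build_upstream_index(edges):
    """Map every asset to the set of assets it reads from directly."""
    upstream = defaultdict(set)
    for src, dst in edges:
        upstream[dst].add(src)
    return upstream

def trace_upstream(asset, upstream):
    """Return every asset that feeds into `asset`, walking the graph transitively."""
    seen = set()
    stack = [asset]
    while stack:
        node = stack.pop()
        for parent in upstream.get(node, ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen

upstream = build_upstream_index(EDGES)
sources = trace_upstream("user:analyst@bank.example", upstream)
print(sorted(s for s in sources if s.startswith("raw.")))
```

The same walk run in the opposite direction (downstream) answers the complementary question: which applications and users are affected if a source table changes.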

Grounded AI

Opening data/tools to agents in controlled ways

Python tools, MCP servers, SQL access, vector stores

Permission propagation from end user to data layer
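Permission propagation is easiest to see in code. The sketch below assumes a hypothetical catalogue of per-user grants and a hypothetical SQL tool that an agent calls; neither is a Databricks API. The point is that the access check uses the end user's identity rather than the agent's service identity, so the agent can never surface data the person asking could not read themselves.

```python
from dataclasses import dataclass, field

class PermissionDenied(Exception):
    """Raised when the end user lacks access to a requested table."""

@dataclass
class Catalog:
    # Hypothetical grants: user -> set of tables that user may SELECT from.
    grants: dict = field(default_factory=dict)

    def can_read(self, user: str, table: str) -> bool:
        return table in self.grants.get(user, set())

@dataclass
class SqlTool:
    """A hypothetical agent tool that always runs as the calling end user."""
    catalog: Catalog

    def query(self, end_user: str, table: str) -> str:
        # Check the end user's grants, not the agent's, before touching data.
        if not self.catalog.can_read(end_user, table):
            raise PermissionDenied(f"{end_user} cannot read {table}")
        return f"SELECT * FROM {table} -- executed as {end_user}"

catalog = Catalog(grants={"alice": {"sales.orders"}, "bob": set()})
tool = SqlTool(catalog)
print(tool.query("alice", "sales.orders"))
try:
    tool.query("bob", "sales.orders")
except PermissionDenied as err:
    print("blocked:", err)
```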

Flexible AI

Partnerships with all major cloud providers, OpenAI and Anthropic

Support for all open-source models (including Chinese models via self-hosting)

Model routing capabilities for cost/performance optimisation
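One common way to frame cost/performance routing is "send each task to the cheapest model that is good enough for it". The sketch below assumes each model has a quality score from offline evaluation and each task type has a minimum acceptable score; the model names, prices and thresholds are all made up for illustration and do not describe Databricks' router.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelOption:
    name: str           # hypothetical endpoint name
    cost_per_1k: float  # assumed cost per 1k tokens, illustrative only
    quality: int        # assumed offline evaluation score (0-100)

# Illustrative catalogue; names, prices and scores are invented.
MODELS = [
    ModelOption("small-open-model", 0.10, 62),
    ModelOption("mid-open-model", 0.50, 78),
    ModelOption("frontier-model", 5.00, 92),
]

# Hypothetical minimum quality each task type needs.
TASK_THRESHOLDS = {"classification": 60, "summarisation": 75, "complex_reasoning": 90}

def route(task: str) -> ModelOption:
    """Return the cheapest model whose quality meets the task's threshold."""
    required = TASK_THRESHOLDS[task]
    eligible = [m for m in MODELS if m.quality >= required]
    if not eligible:
        raise ValueError(f"no model meets quality {required} for {task}")
    return min(eligible, key=lambda m: m.cost_per_1k)

for task in TASK_THRESHOLDS:
    print(task, "->", route(task).name)
```

Because the thresholds come from evaluation data, this kind of router only works as well as the evaluation behind it, which is the challenge covered next.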

The Evaluation Challenge

Current State:

Customers don’t know which models work best for specific tasks

Databricks’ Solution:

Custom “judges” (fine-tuned models for specific evaluation tasks)

Out-of-the-box judges for: grounding, safety, bias

MLflow-based evaluation framework (open source)

Version tracking and A/B testing capabilities

SME labelling sessions for continuous improvement
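The judge-plus-versioning workflow can be sketched in a few lines. In practice a judge is a fine-tuned model; here a crude token-overlap heuristic stands in for it purely to show the shape of the loop (score every answer from two app versions against its source context, then compare averages). This does not use MLflow's evaluation API, and the evaluation set is hypothetical.

```python
import re
from statistics import mean

def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def grounding_judge(answer: str, context: str) -> float:
    """Stand-in for a fine-tuned judge model: share of answer tokens found in the context.
    A real judge would be an LLM; this heuristic only illustrates the interface."""
    answer_tokens = tokens(answer)
    if not answer_tokens:
        return 0.0
    return len(answer_tokens & tokens(context)) / len(answer_tokens)

# Hypothetical evaluation set: the same questions answered by two app versions.
EVAL_SET = [
    {
        "context": "The credit limit for premium accounts is 10000 euros.",
        "v1": "Premium accounts have a credit limit of 10000 euros.",
        "v2": "Premium accounts get unlimited credit and free travel insurance.",
    },
    {
        "context": "Refunds are processed within 5 business days.",
        "v1": "Refunds are processed within 5 business days.",
        "v2": "Refunds usually arrive within 5 business days.",
    },
]

def score_version(version: str) -> float:
    """Average judge score for one app version across the evaluation set."""
    return mean(grounding_judge(row[version], row["context"]) for row in EVAL_SET)

scores = {v: score_version(v) for v in ("v1", "v2")}
winner = max(scores, key=scores.get)
print(scores, "winner:", winner)
```

SME labelling sessions slot into the same loop: expert labels on a sample of answers are what you would use to check, and eventually fine-tune, the judge itself.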

What’s Happening In The Field

Potential Reasons For The MIT “95% Failure Rate” Report:

Primary reason: AI products cannot log, trace, understand, or prove value

Lack of end-user involvement in development process

Insufficient hands-on support and change management

Real-World Agent Limitations:

Multi-step execution: Maximum ~5 steps in production

Steps are templatised and controlled, not free-form

Financial services require predefined workflows with reasoning at each step
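A templatised workflow differs from a free-form agent in that the steps, and their order, are fixed up front, with reasoning recorded at each one. The sketch below assumes a hypothetical credit-review flow with made-up account figures; the five-step cap mirrors the ~5-step ceiling reported above and is an illustrative constant, not a platform limit.

```python
from dataclasses import dataclass, field
from typing import Callable

MAX_STEPS = 5  # mirrors the ~5-step production ceiling reported above; illustrative only

@dataclass
class Step:
    name: str
    run: Callable[[dict], dict]  # takes the case state, returns updates to merge in
    rationale: str               # recorded reasoning for audit

@dataclass
class Workflow:
    steps: list[Step]
    log: list[str] = field(default_factory=list)

    def __post_init__(self):
        if len(self.steps) > MAX_STEPS:
            raise ValueError(f"workflow has {len(self.steps)} steps; limit is {MAX_STEPS}")

    def execute(self, state: dict) -> dict:
        # Steps always run in the predefined order; nothing can be added or skipped.
        for step in self.steps:
            state = {**state, **step.run(state)}
            self.log.append(f"{step.name}: {step.rationale}")
        return state

# Hypothetical credit-review template with invented figures.
workflow = Workflow(steps=[
    Step("load_account", lambda s: {"limit": 2000, "balance": 1800},
         "fetch current limit and balance"),
    Step("utilisation", lambda s: {"utilisation": s["balance"] / s["limit"]},
         "high utilisation may justify a review"),
    Step("recommend", lambda s: {"recommendation": "increase" if s["utilisation"] > 0.8 else "hold"},
         "apply fixed policy threshold of 80%"),
])
result = workflow.execute({"customer_id": "C-001"})
print(result["recommendation"])
for line in workflow.log:
    print(line)
```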

Human-in-the-Loop is Reality:

A disconnect between the automation dream and reality

Even with reasoning capabilities, manual approval is still required

Example: Credit limit increases still need human sign-off
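The credit-limit example maps onto a simple pattern: the agent may reason and propose, but only a named human can approve, and the change cannot be applied without that approval. The sketch below is a hypothetical illustration of that gate; the 1.5x uplift rule, the 80% utilisation trigger and the approver address are invented.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

class Status(Enum):
    PENDING_APPROVAL = "pending_approval"
    APPROVED = "approved"
    REJECTED = "rejected"

@dataclass
class CreditLimitRequest:
    customer_id: str
    current_limit: int
    proposed_limit: int
    agent_reasoning: str
    status: Status = Status.PENDING_APPROVAL
    approver: Optional[str] = None

def agent_propose(customer_id: str, current_limit: int, utilisation: float) -> CreditLimitRequest:
    """Hypothetical agent step: it may reason and propose, but never apply the change."""
    proposed = int(current_limit * 1.5) if utilisation > 0.8 else current_limit
    reasoning = f"utilisation {utilisation:.0%}; proposing {proposed}"
    return CreditLimitRequest(customer_id, current_limit, proposed, reasoning)

def human_decision(request: CreditLimitRequest, approver: str, approve: bool) -> CreditLimitRequest:
    """Only a named human can move the request out of PENDING_APPROVAL."""
    if request.status is not Status.PENDING_APPROVAL:
        raise ValueError("request already decided")
    request.status = Status.APPROVED if approve else Status.REJECTED
    request.approver = approver
    return request

def apply_limit(request: CreditLimitRequest) -> int:
    """Refuse to apply any change that lacks human sign-off."""
    if request.status is not Status.APPROVED:
        raise PermissionError("credit limit change requires human sign-off")
    return request.proposed_limit

req = agent_propose("C-001", 2000, 0.9)
try:
    apply_limit(req)
except PermissionError as err:
    print("blocked:", err)
human_decision(req, approver="credit.officer@bank.example", approve=True)
print(apply_limit(req))
```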

Fine-tuning?

Little adoption despite the initial hype

Reasons:

Maintenance burden (retraining, drift monitoring)

Unclear ROI vs. larger base models

Only justified for specific industry vocabulary or behaviour constraints

Example: a medical app that must never give advice, only facilitate conversation

Use Case Evolution

Three Tiers of AI Adoption:

Productivity Improvements (2023-2024)

Copilots for individual users

Incremental efficiency gains

Process Automation (Current focus)

Templatising business processes

Agent-driven workflow automation

Scaling human tasks

Business Model Innovation (Emerging)

Complete rethinking of value propositions

Example: Behavioural assessment company → AI-powered team formation and task assignment platform

Represents genuine innovation beyond productivity

Agent Development: Different Approaches With Databricks

1. Full Hands-On Development

Complete control over agent behaviour

For complex, custom requirements

Requires engineering expertise

2. Agent Bricks (Semi-Hands-On)

Pre-built components:

Information extraction

Knowledge assistant (RAG)

AI/BI Genie (text-to-SQL)

Custom LLM (fine-tuning)

Multi-agent supervisor

Still requires understanding of extraction processes and evaluation

Not aimed at non-technical business users

GTM Dynamics

Historical Approach (Pre-2024):

Engineering-focused, bottom-up sales

Build POCs/MVPs with engineering teams

Blocked at large organisations without C-suite buy-in

Current Approach:

Top-down + bottom-up simultaneously

FDEs (Forward Deployed Engineers) for hands-on work

C-suite positioning for change management

Industry roundtables for peer learning

What Works:

Bringing customers together to share implementations

“Customers don’t want to be left behind”

Success tracking: new use cases emerging 1–2 months post-event

Digital natives adopt much faster than traditional enterprises

Data Marketplace

Delta Sharing:

Structured data exchange between companies

Use case examples:

Retail: Suppliers ↔ Supermarkets

Travel: Airports ↔ Airlines

Enables better collaborative models without competitive compromise

Solution Marketplace:

Customers can sell bespoke agents to other companies

Example: Travel industry agent

Revenue stream for customers

MCP Integration

Databricks has made a large investment in MCP support

Auto-release of tools as MCP servers (text-to-SQL, similarity search)

Bringing external MCPs into Unity Catalog with permission management

Planning an MCP/agent marketplace

External MCP usage: Expect usage-based charging similar to APIs
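Usage-based charging for external MCP tools would look much like API metering: count calls per customer and tool, then multiply by a per-call price. The sketch below is a hypothetical illustration only; the tool names and prices are invented and it does not use any MCP SDK.

```python
from collections import Counter

# Hypothetical per-call prices for external MCP tools (illustrative, not real pricing).
PRICE_PER_CALL = {"weather.lookup": 0.002, "flights.search": 0.01}

class UsageMeter:
    """Counts tool calls per tenant so an external MCP provider could bill by usage."""

    def __init__(self, prices):
        self.prices = prices
        self.calls = Counter()

    def record(self, tenant, tool):
        if tool not in self.prices:
            raise KeyError(f"unknown tool: {tool}")
        self.calls[(tenant, tool)] += 1

    def invoice(self, tenant):
        # Sum count * price over this tenant's calls; rounding hides float noise.
        return round(sum(count * self.prices[tool]
                         for (t, tool), count in self.calls.items() if t == tenant), 6)

meter = UsageMeter(PRICE_PER_CALL)
for _ in range(100):
    meter.record("acme", "flights.search")
for _ in range(50):
    meter.record("acme", "weather.lookup")
print(meter.invoice("acme"))
```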

Data

Coatue’s updated Fantastic 40

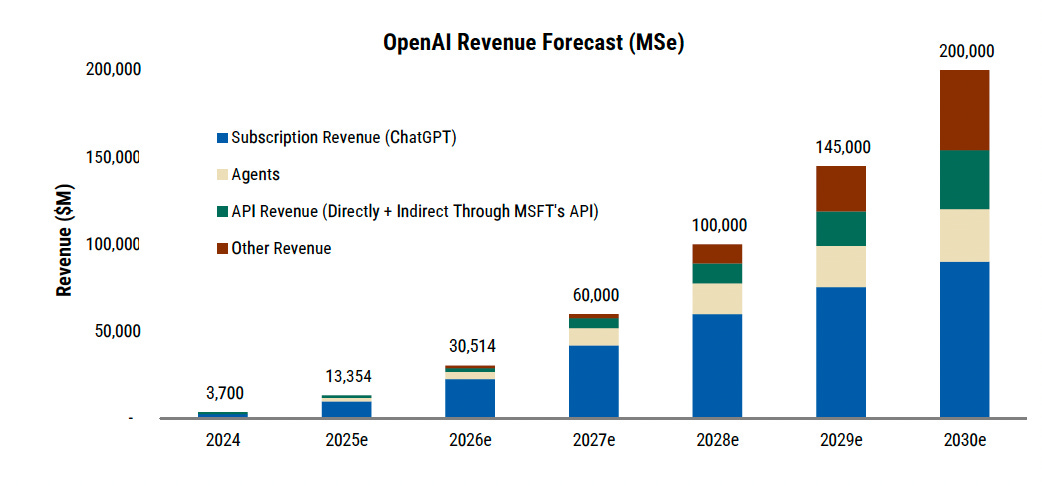

OpenAI’s ramp is unprecedented by a mile, dominated by ChatGPT

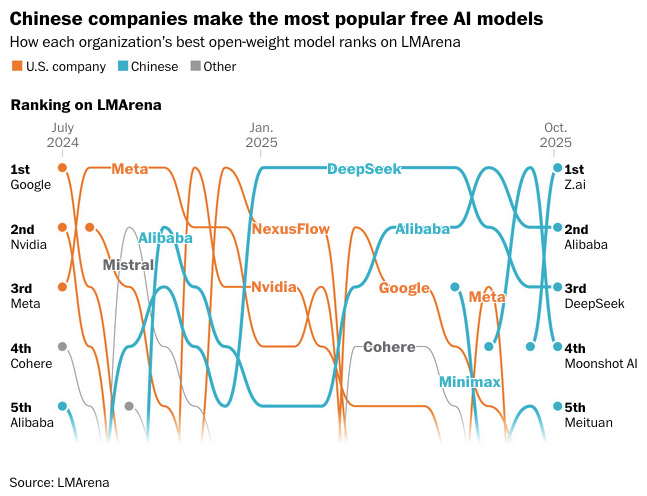

Reflection AI might change things, but it's clear that a large share of the AI ecosystem is now reliant on truly open models from China

Reading

The Data Source #28: Mapping out the Future of Compute 🌎

Building in the AI Era: The HeyGen Way

The Hard Way Pays Off: Inside Sierra’s Design Partner Strategy

Seeing Science Like a Language Model

Have any feedback? Email me at akash@earlybird.com.